How it's Going: Adding NV12 support to VKMS

This work, proposed by my mentor Maíra Canal, is proving more difficult than I thought >W<.

During the Community Bonding Period of GSoC, Maíra proposed that I work on VKMS. While looking at the TODO list of the driver, in the plane feature section, I found the item “Additional buffer formats, especially YUV formats for video like NV12” interesting to work on, as it has some correlation with my GSoC work.

Before I start talking about what was done, I think this blog post needs more context.

What is VKMS?

The Virtual Kernel Mode Setting is a virtual video driver. It is used for testing the correct display of userspace programs without needing a physical monitor. Virtual drivers are useful for automated testing.

And NV12?

NV12 is a multi-planar color format that uses the YCbCr color model with chroma subsampling. This explanation doesn’t say much to a first-time reader, so let’s explain it further.

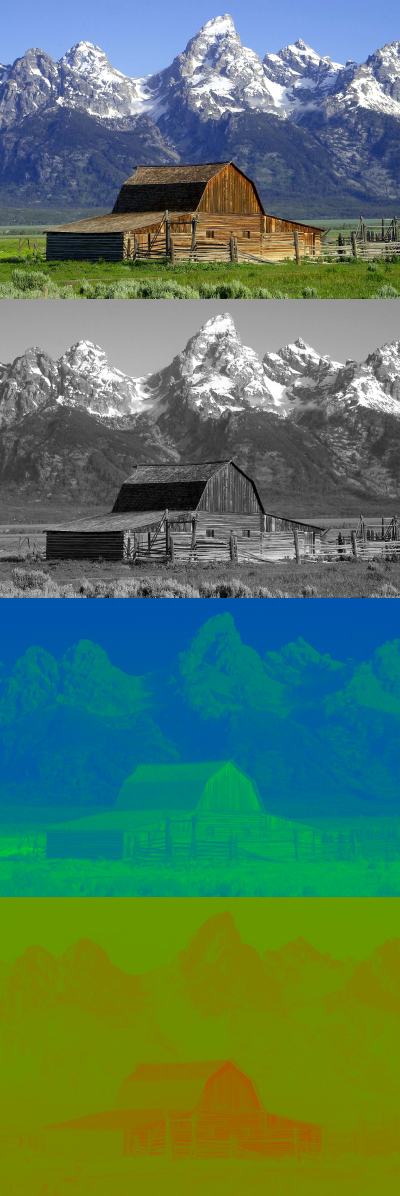

YCbCr, like RGB, is a way to represent color in computer memory. It’s divided into three components:

- Y: Luma, the brightness of a color.

- Cb: Blue-difference chroma, the difference between the blue component and the luma.

- Cr: Red-difference chroma, the difference between the red component and the luma.

This color model is better for compression because it separates the brightness from the color. Humans are more sensitive to changes in brightness than to changes in color, so we can store less color information in an image without a perceptible loss of detail. So some formats share the same chroma (Cb, Cr) components among multiple pixels. This technique is called chroma subsampling.

NV12 shares the same chroma components across 2 pixels horizontally and 2 pixels vertically. It achieves that by having two areas of memory called planes, one only for the luma component and the other for the two chroma components.

A format that separates its components into multiple planes is called a multi-planar format. More specifically, a format with two planes is called a semi-planar format, and one with three planes is called a planar format.

    Y Plane       Cb Cr Plane          Formed Pixels
    Y00 Y01         Cb0 Cr0            Y00Cb0Cr0 Y01Cb0Cr0
    Y02 Y03    +    Cb1 Cr1    ---->   Y02Cb0Cr0 Y03Cb0Cr0
    Y10 Y11                            Y10Cb1Cr1 Y11Cb1Cr1
    Y12 Y13                            Y12Cb1Cr1 Y13Cb1Cr1

Each Yij value uses the corresponding Cbi and Cri values.
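To make the sharing concrete, here is a minimal userspace sketch (not VKMS code) of how a pixel coordinate maps to samples in an NV12 buffer. The helper names `nv12_y_index`, `nv12_cbcr_index`, and `nv12_size` are made up for illustration, and the sketch assumes a tightly packed buffer (no row padding) with even width and height.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helpers, assuming a tightly packed NV12 buffer
 * (no row padding) with even width and height. */

/* Index of the Y sample for pixel (x, y) inside the Y plane. */
static size_t nv12_y_index(size_t width, size_t x, size_t y)
{
    return y * width + x;
}

/* Index of the Cb byte for pixel (x, y) inside the CbCr plane.
 * The matching Cr byte is stored right after it (index + 1). */
static size_t nv12_cbcr_index(size_t width, size_t x, size_t y)
{
    /* A CbCr row holds width / 2 pairs of 2 bytes, so it is `width` bytes long. */
    return (y / 2) * width + (x / 2) * 2;
}

/* Total bytes: one Y byte per pixel plus one CbCr pair per 2x2 block. */
static size_t nv12_size(size_t width, size_t height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    return width * height + (width / 2) * (height / 2) * 2;
}
```

For the 2x4 image in the diagram, the four pixels of the top 2x2 block all resolve to CbCr index 0, and the bottom block resolves to index 2. A 4x4 image takes 24 bytes, which is 12 bits per pixel, half of what a 24-bit RGB format needs.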

What Needs to Be Done?

Currently, VKMS supports none of the features above, so the task is divided into the following steps, in order:

- Multi-planar support

- Subsampling support

- YCbCr <-> RGB conversion

- NV12 support

I think you can see why this work is harder than I thought. I knew none of that information beforehand, so learning all of that was an adventure :D.

I have implemented all of those things, but it still needs a little thinking to get everything working together.

Multi-planar Support

VKMS only expects a drm_framebuffer to have a single plane. The first thing to do is to remove the offset, pitch, and cpp variables from vkms_frame_info and switch to using the arrays they were taken from (fb->offsets, fb->pitches, and fb->format->cpp). With that, we can access the information for every plane in a drm_framebuffer, not just the first one.

struct vkms_frame_info {

struct drm_rect rotated;

struct iosys_map map[DRM_FORMAT_MAX_PLANES];

unsigned int rotation;

- unsigned int offset;

- unsigned int pitch;

- unsigned int cpp;

};

After that, we need the ability to choose a plane and use its own cpp, pitch, and offset. To do that, we pass an index to the functions that access the color information and use it to index the new arrays.

-static size_t pixel_offset(const struct vkms_frame_info *frame_info, int x, int y)

+static size_t pixel_offset(const struct vkms_frame_info *frame_info, int x, int y, size_t index)

{

struct drm_framebuffer *fb = frame_info->fb;

- return fb->offsets[0] + (y * fb->pitches[0])

- + (x * fb->format->cpp[0]);

+ return fb->offsets[index] + (y * fb->pitches[index])

+ + (x * fb->format->cpp[index]);

}

static void *packed_pixels_addr(const struct vkms_frame_info *frame_info,

- int x, int y)

+ int x, int y, size_t index)

{

- size_t offset = pixel_offset(frame_info, x, y);

+ size_t offset = pixel_offset(frame_info, x, y, index);

return (u8 *)frame_info->map[0].vaddr + offset;

}
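The per-plane arithmetic can be tried outside the kernel with a small mock. This is only a sketch: `struct mock_fb`, `mock_pixel_offset`, and `nv12_4x4` are hypothetical names that mirror just the three arrays used above, and the layout assumes a 4x4 NV12 image whose CbCr plane starts right after the Y plane.

```c
#include <assert.h>
#include <stddef.h>

#define MOCK_MAX_PLANES 4

/* Hypothetical stand-in for the per-plane fields used by pixel_offset();
 * it is not the real drm_framebuffer or drm_format_info. */
struct mock_fb {
    unsigned int offsets[MOCK_MAX_PLANES]; /* start of each plane, in bytes */
    unsigned int pitches[MOCK_MAX_PLANES]; /* bytes per row of each plane */
    unsigned char cpp[MOCK_MAX_PLANES];    /* bytes per element of each plane */
    unsigned int num_planes;
};

/* Same arithmetic as pixel_offset() above, applied to the mock type. */
static size_t mock_pixel_offset(const struct mock_fb *fb, int x, int y, size_t index)
{
    assert(index < fb->num_planes);
    return fb->offsets[index] + (size_t)y * fb->pitches[index]
                              + (size_t)x * fb->cpp[index];
}

/* 4x4 NV12: 16 bytes of Y, then 2 rows of 2 CbCr pairs (2 bytes each). */
static const struct mock_fb nv12_4x4 = {
    .offsets = { 0, 16 },
    .pitches = { 4, 4 },
    .cpp = { 1, 2 },
    .num_planes = 2,
};
```

Note that for plane 1 the x and y passed in are already chroma coordinates; dividing by the subsampling factors is a separate step.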

Subsampling Support

The drm_format_info has the hsub and vsub variables that dictate the subsampling factor horizontally and vertically (for NV12, hsub = vsub = 2). So we need to take this into account when accessing the color information of a pixel. Note that this is not done for the first plane, as subsampling is never applied to it, whatever the format.

@@ -238,8 +238,10 @@ static void get_src_pixels_per_plane(const struct vkms_frame_info *frame_info,

{

const struct drm_format_info *frame_format = frame_info->fb->format;

- for (size_t i = 0; i < frame_format->num_planes; i++)

- src_pixels[i] = get_packed_src_addr(frame_info, y, i);

+ for (size_t i = 0; i < frame_format->num_planes; i++){

+ int vsub = i ? frame_format->vsub : 1;

+ src_pixels[i] = get_packed_src_addr(frame_info, y / vsub, i);

+ }

}

void vkms_compose_row(struct line_buffer *stage_buffer, struct vkms_plane_state *plane, int y)

@@ -250,6 +252,8 @@ void vkms_compose_row(struct line_buffer *stage_buffer, struct vkms_plane_state

int limit = min_t(size_t, drm_rect_width(&frame_info->dst), stage_buffer->n_pixels);

u8 *src_pixels[DRM_FORMAT_MAX_PLANES];

+ int hsub_count = 0;

+

enum drm_color_encoding encoding = plane->base.base.color_encoding;

enum drm_color_range range = plane->base.base.color_range;

@@ -258,17 +262,21 @@ void vkms_compose_row(struct line_buffer *stage_buffer, struct vkms_plane_state

for (size_t x = 0; x < limit; x++) {

int x_pos = get_x_position(frame_info, limit, x);

+ hsub_count = (hsub_count + 1) % frame_format->hsub;

+

if (drm_rotation_90_or_270(frame_info->rotation)) {

+ get_src_pixels_per_plane(frame_info, src_pixels, x + frame_info->rotated.y1);

for (size_t i = 0; i < frame_format->num_planes; i++)

- src_pixels[i] = get_packed_src_addr(frame_info,

- x + frame_info->rotated.y1, i) +

- frame_format->cpp[i] * y;

+ if (!i || !hsub_count)

+ src_pixels[i] += frame_format->cpp[i] * y;

}

plane->pixel_read(src_pixels, &out_pixels[x_pos], encoding, range);

- for (size_t i = 0; i < frame_format->num_planes; i++)

- src_pixels[i] += frame_format->cpp[i];

+ for (size_t i = 0; i < frame_format->num_planes; i++) {

+ if (!i || !hsub_count)

+ src_pixels[i] += frame_format->cpp[i];

+ }

}

}
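The counter logic is easier to follow in isolation. The sketch below is a userspace illustration of the same idea, not the driver code: `chroma_index_per_pixel` and `chroma_row` are hypothetical helpers that record which chroma sample each pixel of a row would read.

```c
#include <assert.h>
#include <stddef.h>

/* Record which chroma sample each pixel of a row reads, using the same
 * counter idea as hsub_count above. Returns the number of entries written. */
static size_t chroma_index_per_pixel(size_t n_pixels, int hsub, size_t *out)
{
    size_t chroma = 0;  /* position in the chroma plane, in samples */
    int hsub_count = 0;

    assert(hsub > 0);
    for (size_t x = 0; x < n_pixels; x++) {
        hsub_count = (hsub_count + 1) % hsub;
        out[x] = chroma;        /* stands in for the pixel_read step */
        if (!hsub_count)        /* advance once every hsub pixels */
            chroma++;
    }
    return n_pixels;
}

/* Row of the chroma plane used by luma row y, as in y / vsub above. */
static int chroma_row(int y, int vsub)
{
    return y / vsub;
}
```

With hsub = 2, a row of six pixels reads chroma samples 0, 0, 1, 1, 2, 2, while the luma pointer advances on every pixel. With hsub = 1 the counter is always zero, so the chroma pointer also advances on every pixel.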

YCbCr to RGB conversion

This was, by far, the most difficult part of the project.

YCbCr has three color encoding standards to handle here: BT601, BT709, and BT2020. On top of that, YCbCr values can occupy either the full range of each byte they use or a limited range.

To tell userspace which color encodings and ranges the driver supports, we have to call drm_plane_create_color_properties():

@@ -212,5 +212,14 @@ struct vkms_plane *vkms_plane_init(struct vkms_device *vkmsdev,

drm_plane_create_rotation_property(&plane->base, DRM_MODE_ROTATE_0,

DRM_MODE_ROTATE_MASK | DRM_MODE_REFLECT_MASK);

+ drm_plane_create_color_properties(&plane->base,

+ BIT(DRM_COLOR_YCBCR_BT601) |

+ BIT(DRM_COLOR_YCBCR_BT709) |

+ BIT(DRM_COLOR_YCBCR_BT2020),

+ BIT(DRM_COLOR_YCBCR_LIMITED_RANGE) |

+ BIT(DRM_COLOR_YCBCR_FULL_RANGE),

+ DRM_COLOR_YCBCR_BT601,

+ DRM_COLOR_YCBCR_FULL_RANGE);

+

return plane;

}

The conversion code was taken from tpg-core.c, a virtual driver from the media subsystem that does those conversions in software as well.

As a side thought, maybe it would be better to have those two subsystems use the same code, perhaps through a separate subsystem that handles color formats.

The TPG code was changed to use the drm_fixed.h operations, to be more precise and coherent.

struct pixel_yuv_u8 {

u8 y, u, v;

};

static void ycbcr2rgb(const s64 m[3][3], int y, int cb, int cr,

int y_offset, int *r, int *g, int *b)

{

s64 fp_y; s64 fp_cb; s64 fp_cr;

s64 fp_r; s64 fp_g; s64 fp_b;

y -= y_offset;

cb -= 128;

cr -= 128;

fp_y = drm_int2fixp(y);

fp_cb = drm_int2fixp(cb);

fp_cr = drm_int2fixp(cr);

fp_r = drm_fixp_mul(m[0][0], fp_y) +

drm_fixp_mul(m[0][1], fp_cb) +

drm_fixp_mul(m[0][2], fp_cr);

fp_g = drm_fixp_mul(m[1][0], fp_y) +

drm_fixp_mul(m[1][1], fp_cb) +

drm_fixp_mul(m[1][2], fp_cr);

fp_b = drm_fixp_mul(m[2][0], fp_y) +

drm_fixp_mul(m[2][1], fp_cb) +

drm_fixp_mul(m[2][2], fp_cr);

*r = drm_fixp2int(fp_r);

*g = drm_fixp2int(fp_g);

*b = drm_fixp2int(fp_b);

}

static void yuv_u8_to_argb_u16(struct pixel_argb_u16 *argb_u16, struct pixel_yuv_u8 *yuv_u8,

enum drm_color_encoding encoding, enum drm_color_range range)

{

#define COEFF(v, r) (\

drm_fixp_div(drm_fixp_mul(drm_fixp_from_fraction(v, 10000), drm_int2fixp((1 << 16) - 1)),\

drm_int2fixp(r)) \

)\

const s64 bt601[3][3] = {

{ COEFF(10000, 219), COEFF(0, 224), COEFF(14020, 224) },

{ COEFF(10000, 219), COEFF(-3441, 224), COEFF(-7141, 224) },

{ COEFF(10000, 219), COEFF(17720, 224), COEFF(0, 224) },

};

const s64 bt601_full[3][3] = {

{ COEFF(10000, 255), COEFF(0, 255), COEFF(14020, 255) },

{ COEFF(10000, 255), COEFF(-3441, 255), COEFF(-7141, 255) },

{ COEFF(10000, 255), COEFF(17720, 255), COEFF(0, 255) },

};

const s64 rec709[3][3] = {

{ COEFF(10000, 219), COEFF(0, 224), COEFF(15748, 224) },

{ COEFF(10000, 219), COEFF(-1873, 224), COEFF(-4681, 224) },

{ COEFF(10000, 219), COEFF(18556, 224), COEFF(0, 224) },

};

const s64 rec709_full[3][3] = {

{ COEFF(10000, 255), COEFF(0, 255), COEFF(15748, 255) },

{ COEFF(10000, 255), COEFF(-1873, 255), COEFF(-4681, 255) },

{ COEFF(10000, 255), COEFF(18556, 255), COEFF(0, 255) },

};

const s64 bt2020[3][3] = {

{ COEFF(10000, 219), COEFF(0, 224), COEFF(14746, 224) },

{ COEFF(10000, 219), COEFF(-1646, 224), COEFF(-5714, 224) },

{ COEFF(10000, 219), COEFF(18814, 224), COEFF(0, 224) },

};

const s64 bt2020_full[3][3] = {

{ COEFF(10000, 255), COEFF(0, 255), COEFF(14746, 255) },

{ COEFF(10000, 255), COEFF(-1646, 255), COEFF(-5714, 255) },

{ COEFF(10000, 255), COEFF(18814, 255), COEFF(0, 255) },

};

int r = 0;

int g = 0;

int b = 0;

bool full = range == DRM_COLOR_YCBCR_FULL_RANGE;

unsigned int y_offset = full ? 0 : 16;

switch (encoding) {

case DRM_COLOR_YCBCR_BT601:

ycbcr2rgb(full ? bt601_full : bt601,

yuv_u8->y, yuv_u8->u, yuv_u8->v, y_offset, &r, &g, &b);

break;

case DRM_COLOR_YCBCR_BT709:

ycbcr2rgb(full ? rec709_full : rec709,

yuv_u8->y, yuv_u8->u, yuv_u8->v, y_offset, &r, &g, &b);

break;

case DRM_COLOR_YCBCR_BT2020:

ycbcr2rgb(full ? bt2020_full : bt2020,

yuv_u8->y, yuv_u8->u, yuv_u8->v, y_offset, &r, &g, &b);

break;

default:

pr_warn_once("Not supported color encoding\n");

break;

}

argb_u16->r = clamp(r, 0, 0xffff);

argb_u16->g = clamp(g, 0, 0xffff);

argb_u16->b = clamp(b, 0, 0xffff);

}
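As a cross-check, the same BT601 math can be written in floating point in userspace. This is a reference sketch under stated assumptions, not the driver code: `struct rgb_u16`, `clamp_u16`, and `bt601_to_rgb_u16` are hypothetical names. The coefficients are the ones from the matrices above, and the 219 and 224 divisors are the number of code steps that luma and chroma span in limited range.

```c
#include <assert.h>
#include <stdint.h>

struct rgb_u16 { uint16_t r, g, b; };

/* Round to nearest and clamp to the 16-bit range. */
static uint16_t clamp_u16(double v)
{
    if (v <= 0.0)
        return 0;
    if (v >= 65535.0)
        return 0xffff;
    return (uint16_t)(v + 0.5);
}

/* BT601 8-bit YCbCr to 16-bit RGB, in floating point. */
static struct rgb_u16 bt601_to_rgb_u16(uint8_t y, uint8_t cb, uint8_t cr,
                                       int full_range)
{
    /* Limited range: luma spans 219 steps from 16, chroma 224 steps around 128. */
    double y_scale = 65535.0 / (full_range ? 255.0 : 219.0);
    double c_scale = 65535.0 / (full_range ? 255.0 : 224.0);
    double fy = (y - (full_range ? 0 : 16)) * y_scale;
    double fcb = (cb - 128) * c_scale;
    double fcr = (cr - 128) * c_scale;
    struct rgb_u16 out = {
        .r = clamp_u16(fy + 1.4020 * fcr),
        .g = clamp_u16(fy - 0.3441 * fcb - 0.7141 * fcr),
        .b = clamp_u16(fy + 1.7720 * fcb),
    };

    return out;
}
```

Limited-range white (235, 128, 128) and full-range white (255, 128, 128) both map to 0xffff on every channel. Feeding it (76, 85, 255) in full range, roughly what pure red quantizes to in 8 bits, gives a red channel a little below 0xffff. That hints that part of a mismatch can come from 8-bit quantization itself, although this sketch does not show where the driver's error comes from.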

NV12 support

After all that, we can finally create the NV12 conversion function. We just need to read the YCbCr values the way NV12 stores them.

static void NV12_to_argb_u16(u8 **src_pixels, struct pixel_argb_u16 *out_pixel,

enum drm_color_encoding encoding, enum drm_color_range range)

{

struct pixel_yuv_u8 yuv_u8;

yuv_u8.y = src_pixels[0][0];

yuv_u8.u = src_pixels[1][0];

yuv_u8.v = src_pixels[1][1];

yuv_u8_to_argb_u16(out_pixel, &yuv_u8, encoding, range);

}

Is it Done?

After all this work, you would think that everything worked, right? Well, IGT GPU Tools says the opposite.

[root@archlinux shared]# ./build/tests/kms_plane --run pixel-format

IGT-Version: 1.27.1-g4637d2285 (x86_64) (Linux: 6.4.0-rc1-VKMS-DEVEL+ x86_64)

Opened device: /dev/dri/card0

Starting subtest: pixel-format

Starting dynamic subtest: pipe-A-planes

Using (pipe A + Virtual-1) to run the subtest.

Testing format XR24(0x34325258) / modifier linear(0x0) on A.0

Testing format AR24(0x34325241) / modifier linear(0x0) on A.0

Testing format XR48(0x38345258) / modifier linear(0x0) on A.0

Testing format AR48(0x38345241) / modifier linear(0x0) on A.0

Testing format RG16(0x36314752) / modifier linear(0x0) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.601 YCbCr, YCbCr limited range) on A.0

(kms_plane:403) WARNING: CRC mismatches with format NV12(0x3231564e) on A.0 with 3/4 solid colors tested (0xD)

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.601 YCbCr, YCbCr full range) on A.0

(kms_plane:403) WARNING: CRC mismatches with format NV12(0x3231564e) on A.0 with 2/4 solid colors tested (0xC)

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.709 YCbCr, YCbCr limited range) on A.0

(kms_plane:403) WARNING: CRC mismatches with format NV12(0x3231564e) on A.0 with 3/4 solid colors tested (0xD)

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.709 YCbCr, YCbCr full range) on A.0

(kms_plane:403) WARNING: CRC mismatches with format NV12(0x3231564e) on A.0 with 2/4 solid colors tested (0xC)

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.2020 YCbCr, YCbCr limited range) on A.0

(kms_plane:403) WARNING: CRC mismatches with format NV12(0x3231564e) on A.0 with 3/4 solid colors tested (0xD)

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.2020 YCbCr, YCbCr full range) on A.0

(kms_plane:403) WARNING: CRC mismatches with format NV12(0x3231564e) on A.0 with 2/4 solid colors tested (0xC)

The pixel-format subtest from kms_plane tests all color formats supported by a driver. It does that by creating framebuffers filled with different colors and an image with the same color in userspace. After that, it checks whether the CRC of the framebuffer and the CRC of the userspace image are equal.
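A CRC comparison is all-or-nothing: a single differing bit anywhere in the image changes the checksum. The sketch below illustrates this with a generic bitwise CRC-32. `crc32_simple` is a name made up here, and it is not claimed to be the exact CRC variant that VKMS or IGT use.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 (reflected polynomial 0xEDB88320), written for clarity
 * rather than speed. */
static uint32_t crc32_simple(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xffffffffu;

    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++) {
            /* Shift right; XOR in the polynomial when the low bit was set. */
            crc = (crc >> 1) ^ (0xedb88320u & (0u - (crc & 1u)));
        }
    }
    return ~crc;
}
```

Two buffers that differ only in the least significant bit of one color channel already produce different CRCs, so a conversion that is off by one on a single channel is enough to fail the test.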

The NV12 support described up to this point doesn’t pass because of imprecision in the YCbCr to RGB conversion. I don’t know if the conversion is intrinsically imperfect, or if the use of fixed-point operations is the culprit. All I know is that certain colors are slightly off.
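One place where fixed-point code can lose a unit is the final conversion back to an integer. The sketch below assumes a signed 32.32 layout and a conversion that simply shifts the fractional bits away (truncation). The helper names are hypothetical, this is not the drm_fixed.h code, and whether this is what actually causes the mismatches here is not established.

```c
#include <assert.h>
#include <stdint.h>

#define FP_SHIFT 32 /* assumed 32.32 fixed-point layout */

/* Integer to fixed point (only used with non-negative values here). */
static int64_t int_to_fp(int v)
{
    return (int64_t)v * ((int64_t)1 << FP_SHIFT);
}

/* Fixed point to integer by dropping the fractional bits. */
static int fp_to_int_trunc(int64_t v)
{
    return (int)(v >> FP_SHIFT);
}

/* Fixed point to integer, rounding to nearest by adding one half first. */
static int fp_to_int_round(int64_t v)
{
    return (int)((v + ((int64_t)1 << (FP_SHIFT - 1))) >> FP_SHIFT);
}
```

A value that is one fractional step below 65535 truncates to 65534 but rounds to 65535, so the choice of conversion alone can move a channel by one.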

Luckily, the IGT guys know about this issue. They work around it by checking only the most significant bits of the color values, which basically rounds them. They do that by passing a Gamma Look-Up Table (LUT) to the driver, but VKMS doesn’t support that.

Before that, What is a Gamma LUT?

It is a look-up table in which the index is the input color and the stored value is the resulting color.

This is the definition of a 1D table. You can have one for all the channels, so the transformation is the same for all the color channels, or one for each channel, so you can tweak each channel specifically.

There is a more complex type of LUT, a 3D LUT. But this one I don’t fully understand and it’s not needed. All I know is that you use the three color channels at the same time for the index, one for each coordinate, and the value that you get is a color. And besides that, you have to do interpolations.

Its image representation is a pretty cube :).

Let’s Implement a 1D Gamma LUT

It’s not that difficult; the DRM core does all the hard work.

You have to tell DRM that the driver supports a LUT of a specific size, and the DRM core places it for you inside the crtc_state.

@@ -290,6 +290,9 @@ int vkms_crtc_init(struct drm_device *dev, struct drm_crtc *crtc,

drm_crtc_helper_add(crtc, &vkms_crtc_helper_funcs);

+ drm_mode_crtc_set_gamma_size(crtc, VKMS_LUT_SIZE);

+ drm_crtc_enable_color_mgmt(crtc, 0, false, VKMS_LUT_SIZE);

+

spin_lock_init(&vkms_out->lock);

spin_lock_init(&vkms_out->composer_lock);

After that, you have to use it. You just have to access the framebuffer, after the transformations, and use the 1D LUT of each channel.

static void apply_lut(const struct vkms_crtc_state *crtc_state, struct line_buffer *output_buffer)

{

struct drm_color_lut *lut;

size_t lut_length;

if (!crtc_state->base.gamma_lut)

return;

lut = (struct drm_color_lut *)crtc_state->base.gamma_lut->data;

lut_length = crtc_state->base.gamma_lut->length / sizeof(*lut);

if (!lut_length)

return;

for (size_t x = 0; x < output_buffer->n_pixels; x++) {

size_t lut_r_index = output_buffer->pixels[x].r * (lut_length - 1) / 0xffff;

size_t lut_g_index = output_buffer->pixels[x].g * (lut_length - 1) / 0xffff;

size_t lut_b_index = output_buffer->pixels[x].b * (lut_length - 1) / 0xffff;

output_buffer->pixels[x].r = lut[lut_r_index].red;

output_buffer->pixels[x].g = lut[lut_g_index].green;

output_buffer->pixels[x].b = lut[lut_b_index].blue;

}

}
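To see why a LUT makes the comparison tolerant, here is a userspace sketch of a table that keeps only the top bits of each value. The table size, the 0xf800 mask, and the names `build_masked_lut` and `lut_lookup` are assumptions made for illustration, not values taken from IGT or VKMS.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_LUT_SIZE 256    /* hypothetical table size */
#define SKETCH_MSB_MASK 0xf800 /* keep the 5 most significant bits (assumed) */

/* Fill a single-channel table with an identity ramp, then drop the low bits. */
static void build_masked_lut(uint16_t *lut, size_t len, uint16_t mask)
{
    assert(len > 1);
    for (size_t i = 0; i < len; i++)
        lut[i] = (uint16_t)((i * 0xffff / (len - 1)) & mask);
}

/* Same index arithmetic as apply_lut() above, for one channel. */
static uint16_t lut_lookup(const uint16_t *lut, size_t len, uint16_t value)
{
    size_t index = (size_t)value * (len - 1) / 0xffff;

    return lut[index];
}
```

With this table, 0xffff and a slightly lower value such as 0xff0c both come out as 0xf800, so two images that differ only in their low bits end up with identical pixels and therefore identical CRCs.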

Now we finally have the conversion working :DDDDDDD.

[root@archlinux shared]# ./build/tests/kms_plane --run pixel-format

IGT-Version: 1.27.1-g4637d2285 (x86_64) (Linux: 6.4.0-rc1-VKMS-DEVEL+ x86_64)

Opened device: /dev/dri/card0

Starting subtest: pixel-format

Starting dynamic subtest: pipe-A-planes

Using (pipe A + Virtual-1) to run the subtest.

Testing format XR24(0x34325258) / modifier linear(0x0) on A.0

Testing format AR24(0x34325241) / modifier linear(0x0) on A.0

Testing format XR48(0x38345258) / modifier linear(0x0) on A.0

Testing format AR48(0x38345241) / modifier linear(0x0) on A.0

Testing format RG16(0x36314752) / modifier linear(0x0) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.601 YCbCr, YCbCr limited range) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.601 YCbCr, YCbCr full range) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.709 YCbCr, YCbCr limited range) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.709 YCbCr, YCbCr full range) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.2020 YCbCr, YCbCr limited range) on A.0

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.2020 YCbCr, YCbCr full range) on A.0

Testing format AR24(0x34325241) / modifier linear(0x0) on A.1

Testing format XR24(0x34325258) / modifier linear(0x0) on A.1

Testing format XR48(0x38345258) / modifier linear(0x0) on A.1

Testing format AR48(0x38345241) / modifier linear(0x0) on A.1

Testing format RG16(0x36314752) / modifier linear(0x0) on A.1

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.601 YCbCr, YCbCr limited range) on A.1

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.601 YCbCr, YCbCr full range) on A.1

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.709 YCbCr, YCbCr limited range) on A.1

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.709 YCbCr, YCbCr full range) on A.1

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.2020 YCbCr, YCbCr limited range) on A.1

Testing format NV12(0x3231564e) / modifier linear(0x0) (ITU-R BT.2020 YCbCr, YCbCr full range) on A.1

Dynamic subtest pipe-A-planes: SUCCESS (2.586s)

Subtest pixel-format: SUCCESS (2.586s)

What is missing?

The pixel-format-clamped subtest is still not passing; I haven’t had the time to tackle that yet. I hope that everything will be done once this is solved.

After this, it will be very easy to add another YCbCr format: it is just a matter of reading the color values the way that format stores them.